Stochastic Model Predictive Control

Robust Model Predictive Control (RMPC) is a powerful methodology for designing controllers for uncertain systems in which state and input constraints must be satisfied for every possible disturbance realization. In certain situations, however, this requirement may significantly degrade the overall controller performance because of the need to protect against low-probability outliers. Stochastic Model Predictive Control (SMPC) is a relaxation of RMPC in which the constraints are interpreted probabilistically via chance constraints, allowing for a (small) probability of constraint violation. Unfortunately, chance-constrained control problems are hard in general and must often be approximated. In our work, we focus on sampling-based approximation methods for solving such problems, and derive efficient sample sizes for both linear and nonlinear SMPC problems. The methods are validated numerically on energy-efficient building control problems.
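The group's own sample-size results are not reproduced here. As a generic point of reference, the sketch below computes the classical binomial sample-size bound from the scenario approach for convex programs with `d` decision variables. It returns the smallest number of i.i.d. disturbance samples `N` such that, with confidence at least `1 - beta`, the solution of the sampled problem violates the chance constraint with probability at most `eps`. It assumes a convex sampled problem that is feasible and has a unique solution. The function names are hypothetical.

```python
import math

def binomial_tail(n: int, d: int, eps: float) -> float:
    """P(fewer than d successes in n Bernoulli(eps) trials) = sum_{i<d} C(n,i) eps^i (1-eps)^(n-i)."""
    return sum(math.comb(n, i) * eps**i * (1.0 - eps) ** (n - i) for i in range(d))

def scenario_sample_size(d: int, eps: float, beta: float) -> int:
    """Smallest N >= d with binomial_tail(N, d, eps) <= beta (linear search; the tail decreases in N)."""
    n = d
    while binomial_tail(n, d, eps) > beta:
        n += 1
    return n

# Hypothetical example: 10 decision variables, violation level 0.05, confidence 1 - 1e-6
n_samples = scenario_sample_size(d=10, eps=0.05, beta=1e-6)
```

The required `N` grows roughly linearly in `d` and `1/eps` but only logarithmically in `1/beta`, so a very high confidence level is comparatively cheap in terms of samples.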

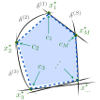

Model Predictive Control (MPC), also known as receding horizon control or rolling horizon control, is a powerful technique employed in diverse engineering applications. MPC is valued mainly for its inherent ability to handle constraints while minimizing a cost (or maximizing a reward). The underlying idea of MPC is to repeatedly approximate an infinite horizon constrained optimal control problem by a finite horizon optimal control problem. At each time step, such a finite horizon problem is solved from the current state, and only the first input of the resulting optimal input sequence is applied to the system. The procedure is then repeated at the next time step, in a receding horizon fashion.
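The sketch below makes the receding horizon idea concrete. It assumes a hypothetical scalar linear system `x+ = a*x + b*u` with state constraint `|x| <= x_max`, input constraint `|u| <= 2` and a quadratic stage cost. Purely for illustration, each finite horizon problem is solved by brute-force enumeration over a discretized input set rather than by a proper optimization solver. Only the first input of the optimal sequence is applied before the problem is re-solved from the new state. All names and parameter values are assumptions.

```python
import itertools

def solve_finite_horizon(x0, a, b, horizon, u_grid, x_max, q=1.0, r=0.1):
    """Best input sequence on u_grid keeping |x| <= x_max (None if infeasible)."""
    best_cost, best_seq = float("inf"), None
    for seq in itertools.product(u_grid, repeat=horizon):
        x, cost, feasible = x0, 0.0, True
        for u in seq:
            x = a * x + b * u                      # model prediction
            if abs(x) > x_max:                     # state constraint
                feasible = False
                break
            cost += q * x * x + r * u * u          # quadratic stage cost
        if feasible and cost < best_cost:
            best_cost, best_seq = cost, seq
    return best_seq

def receding_horizon(x0, steps, a=1.2, b=1.0, horizon=3, x_max=5.0):
    """Closed-loop simulation: re-solve at every step, apply only the first input."""
    u_grid = [i / 4 for i in range(-8, 9)]        # input constraint |u| <= 2, step 0.25
    x, trajectory = x0, [x0]
    for _ in range(steps):
        seq = solve_finite_horizon(x, a, b, horizon, u_grid, x_max)
        if seq is None:
            raise RuntimeError("finite horizon problem is infeasible")
        x = a * x + b * seq[0]                     # apply the first input only
        trajectory.append(x)
    return trajectory

trajectory = receding_horizon(4.0, steps=20)
```

Although the open-loop system is unstable (`a = 1.2`), re-solving at every step keeps the state inside its constraint and drives it towards the origin.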

MPC uses a model of the plant to predict its future behaviour and then computes an optimal input trajectory based on these predictions. In most real applications, however, exact models cannot be obtained, due either to model uncertainty or to external disturbances. In the literature, uncertainty is most commonly addressed by formulating a Robust MPC problem. There, the uncertainty is assumed to be bounded, and a control law is computed that satisfies the constraints for every possible uncertainty realization. This pessimistic view may significantly degrade the overall controller performance, due to the need to protect against low-probability uncertainty outliers.
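The pessimism of the robust approach can be quantified in a simple case. Assume a hypothetical scalar system `x_{j+1} = a*x_j + b*u_j + w_j` with bounded disturbances `|w_j| <= w_max`. The constraint `x_k <= x_max` then holds for every disturbance realization exactly when the nominal (disturbance-free) prediction satisfies `x_k <= x_max - margin`. Here the margin is the worst-case accumulated disturbance, computed in the sketch below. It grows with the prediction step `k`, even though such extreme disturbance sequences may be very unlikely.

```python
def robust_margin(a: float, w_max: float, k: int) -> float:
    """Worst-case effect of k disturbances |w_j| <= w_max on x_k.

    x_k = a^k x_0 + sum_j a^(k-1-j) (b u_j + w_j), so the largest possible
    disturbance contribution is w_max * sum_{i=0}^{k-1} |a|^i.
    """
    return w_max * sum(abs(a) ** i for i in range(k))

# Margins for prediction steps 1..5 (a = 0.9, w_max = 0.3; hypothetical values)
margins = [robust_margin(0.9, 0.3, k) for k in range(1, 6)]
```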

An alternative, and somewhat less pessimistic, view on uncertainty is offered by Stochastic MPC (SMPC). In SMPC, the constraints are interpreted probabilistically, allowing for a small violation probability, so that even unbounded uncertainty can be studied. Unfortunately, SMPC problems are hard in general and can only be solved approximately or by imposing a specific problem structure. This raises questions that Robust MPC does not address: what happens if the uncertainty is not bounded, or if it is not uniformly distributed? A less conservative approach is to take these possibilities into account, identifying appropriate distributions for the uncertainties and formulating a stochastic MPC problem instead. In this context, several points must be taken into consideration in the problem formulation:

Probabilistic Constraints:

When stochastic disturbances are unbounded, satisfying all constraints with probability one is impossible, since at any time the system may encounter a disturbance large enough to force a constraint violation. It is therefore important to replace the hard constraints with soft, probabilistic ones (chance constraints), ensuring that they are respected with a desired probability.
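For Gaussian disturbances no finite robust margin exists, but a chance constraint still leads to a finite constraint tightening. The sketch below assumes a hypothetical scalar system `x_{j+1} = a*x_j + b*u_j + w_j` with i.i.d. disturbances `w_j ~ N(0, sigma^2)`. The prediction error at step `k` is then Gaussian with variance `sigma^2 * sum_{j<k} a^(2j)`. So `P(x_k <= x_max) >= 1 - eps` holds exactly when the nominal prediction satisfies `x_k <= x_max - z * std_k`, where `z` is the standard normal `(1 - eps)`-quantile. A Monte Carlo simulation checks the resulting violation rate. Names and values are illustrative assumptions.

```python
import math
import random
from statistics import NormalDist

def chance_margin(a: float, sigma: float, k: int, eps: float) -> float:
    """Tightening of x_max so that P(x_k <= x_max) >= 1 - eps for w_j ~ N(0, sigma^2)."""
    # Prediction error e_k = sum_j a^(k-1-j) w_j is Gaussian with this standard deviation
    std_k = sigma * math.sqrt(sum(a ** (2 * j) for j in range(k)))
    return NormalDist().inv_cdf(1.0 - eps) * std_k

def empirical_violation(a, sigma, k, x_nominal, x_max, n=50_000, seed=0):
    """Fraction of simulated disturbance sequences with x_nominal + e_k > x_max."""
    rng = random.Random(seed)
    violations = 0
    for _ in range(n):
        e = 0.0
        for _ in range(k):
            e = a * e + rng.gauss(0.0, sigma)  # error dynamics, independent of the input
        violations += (x_nominal + e > x_max)
    return violations / n

# Hypothetical values: a = 0.9, sigma = 0.2, prediction step k = 5, eps = 0.05
a, sigma, k, eps, x_max = 0.9, 0.2, 5, 0.05, 1.0
margin = chance_margin(a, sigma, k, eps)
rate = empirical_violation(a, sigma, k, x_max - margin, x_max)
```

The observed violation rate stays close to `eps`, whereas enforcing the constraint for every realization would be impossible here.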

Expected Value Constraints:

Another way of dealing with constraints is to require that they are respected on average, i.e., in expectation, for the optimization problem considered. Depending on the nature of the problem, such expected value constraints may be more meaningful than probabilistic ones.
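To see how an expected value constraint differs from a probabilistic one, consider a hypothetical one-step scalar example `x+ = a*x + b*u + w` with zero-mean Gaussian `w`. The constraint is linear and the noise has zero mean, so `E[x+] <= x_max` reduces to the nominal constraint with no tightening at all. If the input places `E[x+]` exactly on the bound, the constraint is still violated in roughly half of the realizations. Whether that is acceptable depends on the application. Names and values are illustrative assumptions.

```python
import random

def sample_next_states(x, u, a, b, sigma, n, seed=0):
    """Monte Carlo samples of x+ = a*x + b*u + w with w ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    return [a * x + b * u + rng.gauss(0.0, sigma) for _ in range(n)]

a, b, x, sigma, x_max = 0.9, 1.0, 0.5, 0.1, 1.0
u = (x_max - a * x) / b  # largest input with E[x+] <= x_max (constraint active)
samples = sample_next_states(x, u, a, b, sigma, 100_000)
mean_next = sum(samples) / len(samples)                         # close to x_max
frac_violating = sum(s > x_max for s in samples) / len(samples)  # close to 0.5
```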

Cost (Reward):

The simplest cost (reward) is perhaps the expected cost (reward), formulated as the expected value of the sum of discounted cost-per-stage functions. Depending on the application, alternative and more challenging formulations consider the long-run expected average cost (reward) or the long-run pathwise cost (reward).
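The expected discounted cost and the pathwise average cost can be compared on a simple example. The sketch below assumes a hypothetical scalar system `x+ = a*x + b*u + w` with Gaussian `w`, a fixed linear feedback `u = -k*x` (not an MPC policy) and quadratic stage cost `q*x^2 + r*u^2`. The expected discounted cost is estimated by averaging over many trajectories, whereas the pathwise average cost is computed along a single long trajectory. For this stable linear closed loop, both can be checked against closed-form values obtained from the variance recursion `E[x_{t+1}^2] = (a - b*k)^2 E[x_t^2] + sigma^2`.

```python
import random

def expected_discounted_cost_mc(a, b, k_gain, q, r, sigma, gamma, horizon, n_paths, seed=0):
    """Monte Carlo estimate of E[sum_t gamma^t (q x_t^2 + r u_t^2)], x_0 = 0, truncated at horizon."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_paths):
        x, disc = 0.0, 0.0
        for t in range(horizon):
            u = -k_gain * x
            disc += gamma ** t * (q * x * x + r * u * u)
            x = a * x + b * u + rng.gauss(0.0, sigma)
        total += disc
    return total / n_paths

def expected_discounted_cost_exact(a, b, k_gain, q, r, sigma, gamma, horizon):
    """Same quantity from the recursion E[x_{t+1}^2] = a_cl^2 E[x_t^2] + sigma^2."""
    a_cl2 = (a - b * k_gain) ** 2
    var, total = 0.0, 0.0
    for t in range(horizon):
        total += gamma ** t * (q + r * k_gain ** 2) * var
        var = a_cl2 * var + sigma ** 2
    return total

def pathwise_average_cost(a, b, k_gain, q, r, sigma, steps, seed=0):
    """Long-run average of the stage cost along ONE simulated trajectory."""
    rng = random.Random(seed)
    x, total = 0.0, 0.0
    for _ in range(steps):
        u = -k_gain * x
        total += q * x * x + r * u * u
        x = a * x + b * u + rng.gauss(0.0, sigma)
    return total / steps

def stationary_average_cost(a, b, k_gain, q, r, sigma):
    """Expected stage cost under the stationary distribution of x+ = (a - b k) x + w."""
    a_cl = a - b * k_gain
    if abs(a_cl) >= 1.0:
        raise ValueError("closed loop is not stable")
    return (q + r * k_gain ** 2) * sigma ** 2 / (1.0 - a_cl ** 2)

params = dict(a=1.1, b=1.0, k_gain=0.6, q=1.0, r=0.1, sigma=0.2)  # hypothetical, a - b*k = 0.5
mc_discounted = expected_discounted_cost_mc(**params, gamma=0.9, horizon=60, n_paths=5000)
exact_discounted = expected_discounted_cost_exact(**params, gamma=0.9, horizon=60)
pathwise_avg = pathwise_average_cost(**params, steps=200_000)
stationary_avg = stationary_average_cost(**params)
```

Here the average along a single trajectory agrees with the stationary expected average. For general stochastic systems the pathwise and expected notions need not coincide.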

The goal of this research group is to develop the theoretical foundations of SMPC and to address implementation issues, driven by relevant applications. The focus of our current and past work can be divided into the following areas: