Optimal Control at LARGE

Control theory deals with dynamical systems that can be controlled, i.e., whose evolution can be influenced by some external agent. Optimal control aims at finding a control policy for a dynamical system over a period of time such that an associated reward is maximized. Despite wide ranging progress on both the theory and applications of optimal control, considerable challenges remain when applying the existing methods to large scale systems. To address these challenges, we use optimization tools and methods to develop an approximation framework for optimal control problems: 1) energy management in buildings and districts; 2) population systems.

Optimal control is a mature mathematical discipline with numerous applications in both science and engineering. Despite wide ranging progress on both the theory and applications of optimal control for more than half a century, considerable challenges remain when applying the existing methods to large scale systems. The difficulties become even greater when one moves outside the classical realm of model based optimal control to deducing model-free control policies directly from data, or macroscopic behaviours emerge out of microscopic interactions of large populations of agents.

To address these challenges, we first formulate optimal control problems as infinite dimensional linear programs. Then, by leveraging the recent optimization techniques, we approximately solve the infinite linear problems via our data-driven approach, which admits a close connection to statistical learning theory that enables us to construct explicit accuracy guarantees for the obtained policy. More specifically, we develop randomised LP approximations of optimal control problems, with theoretical performance guarantees. Our approach lends itself to a “batch” formulation. If a data-driven perspective is adopted, then we propose to solve progressively larger optimisation problems, and the developed methods are implemented sequentially, which enables deployment in a “big data” context. The effectiveness and efficiency of the proposed framework are demonstrated on the following two applications.

Energy management in buildings and districts

By efficiently exploiting the interlinked buildings, we use our developed approach to compute the optimal control policy for large scale energy management systems that reduces the environmental footprint of the building stock. The main challenge here is the high dimensionality of the corresponding system due in part to incorporating weather and other forecast information and the associated uncertainty in the system.

Research on this topic is conducted in close collaboration with the Urban Energy Systems Laboratory at Empa external page [link], supported in part also by the SCCER (Swiss Competence Center for Energy Research) Future Energy Efficient Buildings & Districts [external page link] and SNSF (Swiss National Science Foundation) under NCCR Automation [external page link].

Population systems

Population systems involve the interaction of many agents with local decision-making capabilities coupled through the use of common resources. To achieve a desired global system behaviour, we use the developed approach to find an optimal control policy that coordinates the actions of the agents. There are two main challenges that we would like to address here: 1) defining suitable "features" to abstract the individual states; 2) integration of uncertainty due to the presence of non-participating agents.

Research

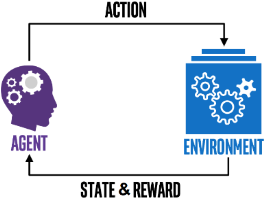

We study infinite-horizon optimal control problems for discrete-time systems when the dynamics and/or the stage costs are unknown. The general approach we have considered consists in exploring the environment and collecting input/output data as well as costs in a reinforcement learning fashion. We then cast the acquired information into an LP formulation that returns the approximated optimal Q-function and/or policy. More specifically, in [6], we introduce a relaxed version of the Bellman operator for Q-functions and prove that it is still a monotone contraction mapping with a unique fixed point. In the spirit of the linear programming approach to approximate dynamic programming, we exploit the new operator to build a simplified linear program (LP) for Q-functions. In the case of discrete time stochastic linear systems with infinite state and action spaces, the solution of the LP preserves the minimizers of the optimal Q-function. Therefore, even though the solution of the LP does not coincide with the optimal Q-function, the policy we retrieve is the optimal one. The LP has fewer decision variables than existing programs, and we show how it can be employed together with reinforcement learning approaches when the dynamics is unknown. Moreover, in many applications one cannot easily specify the cost of a task, but can instead observe an expert behaviour. In [4], we consider Markov decision processes with an unknown cost function and employ stochastic convex optimization tools to address the problem of imitation learning, which consists of learning a policy from a finite set of expert demonstrations. Among other benefits, the proposed approximation schemes provide explicit probabilistic performance bounds on the quality of the recovered solutions.

In [2,9], we advance the recent techniques for model-based control problems. In [9], we derive a family of data-driven iterative optimisation algorithms based on the LP approach, off-policy Q-learning, and randomised experience replay, that compute a near-optimal feedback policy. In [2], we propose a novel approximation scheme for the value function and Q-function formulation of the Linear Programming approach to Approximate Dynamic Programming. The proposed approach optimizes over a restricted function space to approximate the value function or Q-function. Working in the discrete time, continuous space setting, we provide guarantees for the fitting error and online performance of the policy. These guarantees complement the existing bounds that appear in the literature.

Numerically, to deal with large optimization problems arising in data-driven optimal control, we propose the following two approaches. In [8], we exploit the parallel computing architecture of a graphics processing unit to accelerate a state-of-the-art method for solving data-driven optimal control problems, and for many large-scale problems, our implementation is up to two orders of magnitude faster than the conventional CPU implementation. For problems that cannot be efficiently parallelised, in [1], we first solve the control problem with respect to a subset of all data samples and keep only the data samples associated with the binding constraints, then add constraints corresponding to new data samples in the resulting problem and repeat the procedure. This sequential scheme ensures the obtained solution converges to the optimal one.

We apply optimization and machine learning techniques to solve hydro scheduling problems, networked dynamic systems and hybrid control problems in [3,5,7], respectively. In [3], we present an approximate method for solving nonlinear control problems over long time horizons, in which the full nonlinear model is preserved over an initial part of the horizon, while the remainder of the horizon is modelled using a linear relaxation. We propose a Benders decomposition-based solution algorithm, and prove that it converges after a finite number of iterations, even when the nonlinear initial stage problems are solved inexactly. We also bound the sub-optimality of the split-horizon method with respect to the original nonlinear problem. We then apply this method to a multiple reservoir hydro system, and demonstrate its numerical benefits over existing methods. In [5], we consider a sub-class of hybrid systems that are time-invariant and do not have binary states or control inputs. For such systems we develop an algorithm that learns an extended Q-function. Numerical experiments show that our controllers outperform naive implementations of hybrid MPC, without having to choose terminal costs or constraints. In [7], we introduced a new clustering measure, the Degree of Freedom, for networked dynamical systems under disturbance suppression. The Degree of Freedom measure captures the network structural capability of locally confined disturbances within the clusters. Thanks to this measure, we are able to generate optimal graph partitions to minimize the risk of system failure due to abrupt disturbances.

Publications

[1] G. Banjac and J. Lygeros. external page A data-driven policy iteration scheme based on linear programming. IEEE Conference on Decision and Control (CDC), 2019.

[2] P.N. Beuchat, A. Georghiou and J. Lygeros. external page Performance Guarantees for Model-Based Approximate Dynamic Programming in Continuous Spaces. IEEE Transactions on Automatic Control, 65(1):143-158, 2019.

[3] B. Flamm, J. Warrington and J. Lygeros. external page Two-Stage Dual Dynamic Programming with Application to Nonlinear Hydro Scheduling. IEEE Transactions on Control Systems Technology, 2020.

[4] A. Kamoutsi, G. Banjac and J. Lygeros. external page Stochastic convex optimization for provably efficient apprenticeship learning. NeurIPS 2019 Optimization Foundations for Reinforcement Learning Workshop, 2019.

[5] A. Martinelli and J. Lygeros.external page Control of networked systems by clustering: the degree of freedom concept. 21st IFAC World Congress, 2020, in press.

[6] S. Menta, J. Warrington, J. Lygeros, and M. Morari.external page Learning solutions to hybrid control problems using Benders cuts. Proceedings of Machine Learning Research, 120:1-9, 2020.

[7] M. Schubiger, G. Banjac, and J. Lygeros. external page GPU acceleration of ADMM for large-scale quadratic programming. Journal of Parallel and Distributed Computing, 14:55-67, 2020.

[8] A. Tanzanakis and J. Lygeros. external page Data-driven control of unknown systems: a linear programming approach. 21st IFAC World Congress, 2020, in press.

[9] J. Coulson, J. Lygeros, and F. Dörfler. Distributionally robust chance constrained data-enabled predictive control. IEEE Transactions on Automatic Control, 2022.

[10] A. Martinelli, M. Gargiani, and J. Lygeros. Data-driven optimal control with a relaxed linear program, Automatica. vol. 136, p. 110052, 2022.

[11] P. Beuchat, J. Warrington, and J. Lygeros. Accelerated point-wise maximum approach to approximate dynamic programming. IEEE Transactions on Automatic Control, vol. 67, pp. 251-266, January 2022.

[12] S. Menta, J. Warrington, J. Lygeros, and M. Morari. Learning Q-function approximations for hybrid control problems. IEEE Control Systems Letters, vol. 6, pp. 1364-1369, 2022.

[13] B. Gentile, D. Paccagnan, B. Ogunsola, and J. Lygeros. The Nash equilibrium with inertia in population games. IEEE Transactions on Automatic Control, vol. 66, pp. 5742-5755, December 2021.

[14] A. Aboudonia, A. Martinelli, and J. Lygeros. Passivity-based decentralized control for discrete-time large-scale systems. IEEE Control Systems Letters, vol. 5, no. 6, pp. 2072-2077, 2021.

[15] E. Elokda, J. Coulson, P. N. Beuchat, J. Lygeros, and F. Dörfler. external page Data-enabled predictive control for quadcopters. International Journal of Robust and Nonlinear Control, vol. 31, no. 18, pp. 8916-8936, 2021.

[16] G. Banjac and J. Lygeros. On the asymptotic behavior of the Douglas-Rachford and proximal-point algorithms for convex optimization. Optimization Letters, February 2021.

[17] B. Flamm, A. Eichler, J. Warrington, and J. Lygeros. Two-stage dual dynamic programming with application to nonlinear hydro scheduling. IEEE Transactions on Control Systems Technology, vol. 29, pp. 96-107, January 2021.

[18] D. Alpago, F. Dörfler and J. Lygeros. An extended Kalman filter for data-enabled predictive control.

IEEE Control Systems Letters, vol. 4, pp. 994-999, October 2020.

[19] M. Schubiger, G. Banjac, and J. Lygeros. GPU acceleration of ADMM for large-scale quadratic programming. Journal of Parallel and Distributed Computing, vol. 144, pp. 55-67, October 2020.

[20] F. Parise, B. Gentile, and J. Lygeros. A distributed algorithm for almost-Nash equilibria of average aggregative games with coupling constraints. IEEE Transactions on Control of Network Systems, vol. 7, pp. 770-782, June 2020.

People

Professor, Head of Automatic Control Laboratory

-John Lygeros

Postdoc

-Goran Banjac

-Jianzhe Zhen

PhD-candidate

-Angeliki Kamoutsi

-Andrea Martinelli

-Sandeep Menta

-Alexandros Tazanakis

Alumnus

external page -Joe Warrington Senior Scientist, currently Staff Research Engineer at HomeX, UK

Presentations

• John Lygeros, “Data Enabled Predictive Control", Plenary Lecture at the Indian Control Conference (ICC), virtual, December 2021. [external page Video]

• A. Martinelli, M. Gargiani, and J. Lygeros, "Data-driven optimal control via linear programming with artificial constraints", in IEEE Conference on Decision and Control, Austin, Texas, USA, December 13-15, 2021.

• A. Kamoutsi, G. Banjac, and J. Lygeros, "Efficient performance bounds for primal-dual reinforcement learning from demonstrations", in International Conference on Machine Learning (ICML), July 18-24, 2021.

• L. Huang, J. Zhen, J. Lygeros, and F. Dörfler, "Quadratic regularization of data-enabled predictive control: Theory and application to power converter experiments", in IFAC Symposium on System Identification, July 13-16, 2021.

• A. Aboudonia, A. Martinelli, and J. Lygeros, "Passivity-based decentralized control for discrete-time large-scale systems", in American Control Conference, New Orleans, Louisiana, U.S.A., May 24-28, 2021.

• Y. Chen, S. Zou, and J. Lygeros, "Game theoretic stochastic energy coordination under a distributed zeroth-order algorithm", in IFAC World Congress, Berlin, Germany, July 12-17, 2020.

• A. Tanzanakis and J. Lygeros, "Constrained optimal tracking control of unknown systems: A multi-step linear programming approach", in IEEE Conference on Decision and Control, Jeju Island, Republic of Korea, December 14-18, 2020.

• John Lygeros, "Data Enabled Predictive Control: Stochastic systems and implicit dynamic predictors", Invited Talk at Learning for Dynamics and Control (L4DC), virtual, June 2020. [external page Video]

• Goran Banjac, “Operator splitting methods for convex optimization", invited talk at the Carnegie Mellon University, Pittsburgh, PA, USA, March 2020. Joint work with B. Stellato, P. Goulart, A. Bemporad and S. Boyd.

• Angeliki Kamoutsi, “Stochastic convex optimization for provably efficient apprenticeship learning", poster presentation at the NeurIPS Optimization Foundations for Reinforcement Learning Workshop, Vancouver, Canada, December 2019. Joint work with G. Banjac and J. Lygeros.

• Goran Banjac, “A data-driven policy iteration scheme based on linear programming", presentation at the IEEE Conference on Decision and Control (CDC), Nice, France, December 2019. Joint work with J. Lygeros.

• Angeliki Kamoutsi, “Randomized algorithms and PAC bounds for data-driven inverse stochastic optimal control", presentation at the International Conference of Continuous Optimization (ICCOPT), Berlin, Germany, August 2019. Joint work with Tobias Sutter.

• Goran Banjac, “Decentralized resource allocation via dual consensus ADMM", presentation at the American Control Conference (ACC), Philadelphia, PA, USA, July 2019. Joint work with F. Rey, P. Goulart and J. Lygeros.

• John Lygeros, “Optimal control at large", DREAM/CPAR Seminar, U.C. Berkeley, August 2019.

• John Lygeros, “Optimal control at large", Plenary Lecture at the European Control Conference (ECC), Naples, Italy, June 2019. [external page Video]

• Goran Banjac, “Decentralized resource allocation via dual consensus ADMM", presentation at the ABB Corporate Research Center, Baden-Dättwil, Switzerland, January 2019. Joint work with F. Rey, P. Goulart and J. Lygeros.

• John Lygeros, “Approximate dynamic programming through finite dimensional linear programs", invited talk at the Innovation in Predictive Control workshop, IIT Bombay, India, November 2018.

Research supported by